Automakers are side by side in a road race to develop the world’s first fully autonomous vehicles. Manufacturers hope cars that can drive themselves will reduce accidents, improve traffic flow, and allow humans to escape mundane commutes. LiDAR and embedded vision are two sensing technologies poised to increase safety in autonomous vehicles.

LiDAR and Embedded Vision Improve Automobile Safety

The companies developing machine vision technologies for autonomous vehicles have a range of sensors to choose from. Two popular choices are LiDAR and optical cameras. Elon Musk has stood firm on cameras paired with computer vision as the approach of choice for Tesla: a neural network analyzes the images from the cameras surrounding each car to make driving decisions.

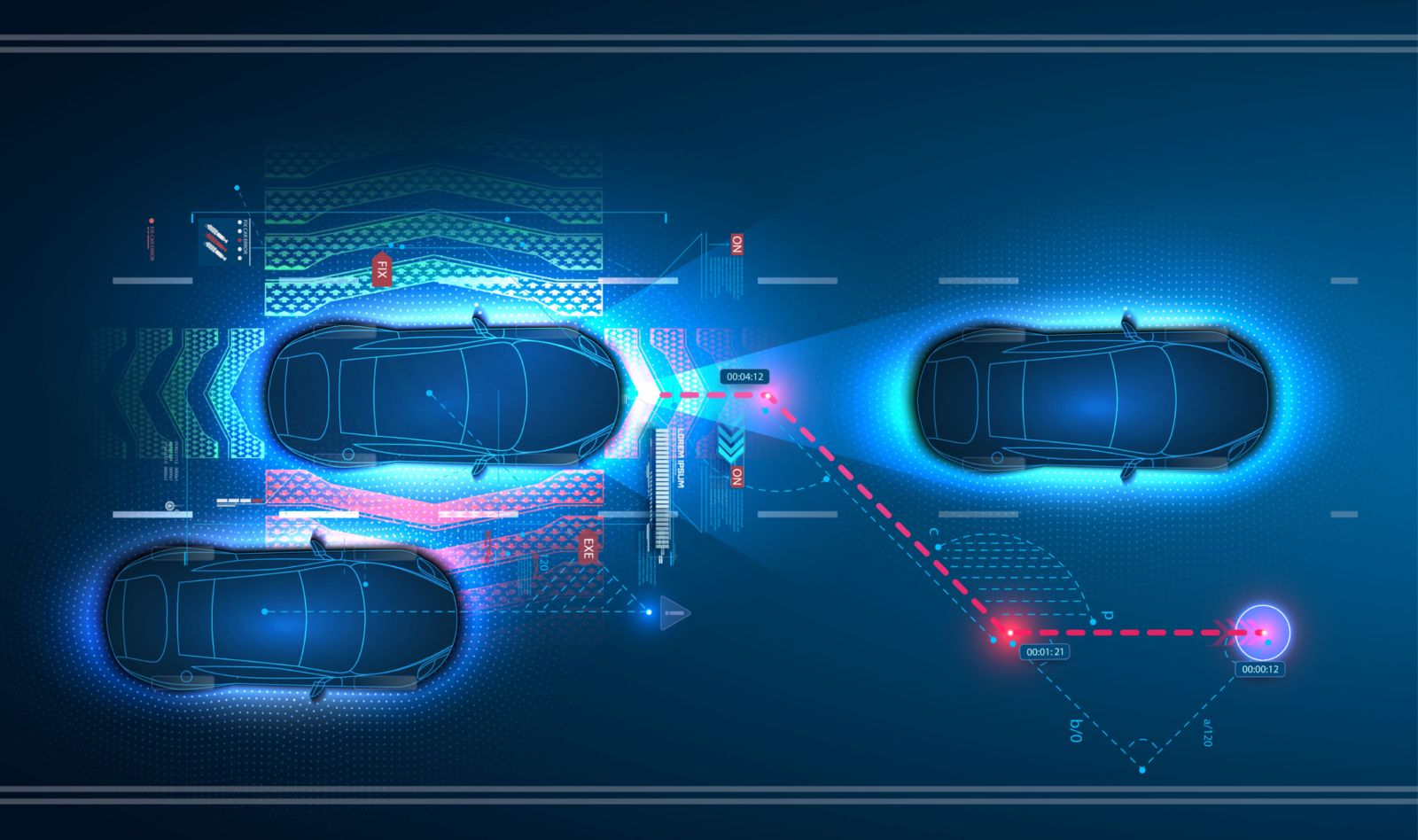

Camera data and complex algorithms come together to make up embedded vision systems. With these systems, the car can process much of the data on its own and make intelligent decisions in real time. Keeping the cars connected is still integral, though: it lets manufacturers collect data and craft updates that roll out automatically to the autonomous vehicles.

Others in the industry believe that LiDAR (Light Detection and Ranging) is the way to go. This system uses laser pulses to build a real-time 3D map of the vehicle’s surroundings. LiDAR costs much more than cameras, but cameras bring challenges of their own, chiefly in interpreting the data they collect. Because LiDAR measures distance directly, it does a fine job of helping cars avoid collisions.
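To make the laser-pulse idea concrete, here is a minimal sketch of how one pulse can become one point in a 3D map. It assumes a simple time-of-flight model in which the sensor reports the round-trip time of each pulse along with the beam's azimuth and elevation angles. The function names and the input format are illustrative assumptions, not the interface of any particular LiDAR unit.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def range_from_round_trip(round_trip_s: float) -> float:
    """Distance to the target: the pulse travels out and back, so halve the path."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def to_cartesian(range_m: float, azimuth_rad: float, elevation_rad: float):
    """Convert a range plus beam angles into an (x, y, z) point in the sensor frame.

    Assumed convention: x points forward, y to the left, z up.
    """
    horizontal = range_m * math.cos(elevation_rad)
    x = horizontal * math.cos(azimuth_rad)
    y = horizontal * math.sin(azimuth_rad)
    z = range_m * math.sin(elevation_rad)
    return (x, y, z)


def build_point_cloud(returns):
    """Turn (round_trip_s, azimuth_rad, elevation_rad) tuples into 3D points."""
    return [
        to_cartesian(range_from_round_trip(t), az, el)
        for t, az, el in returns
    ]
```

A pulse that returns after 200 nanoseconds corresponds to a target roughly 30 meters away. Repeating the conversion for every pulse in a sweep yields the point cloud that serves as the 3D map.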

The Future of LiDAR and Embedded Vision in Autonomous Vehicles

Right now, it’s hard to say which technology will win the checkered flag and find itself the standard in autonomous vehicles. Perhaps it will be a combination of both embedded vision and LiDAR. The two technologies don’t need to compete; they can work together to provide a safer driving experience. Many on the side of computer vision argue that humans have been driving cars for over a hundred years without LiDAR.

However, the goal is to improve the safety of driving as vehicles become fully autonomous. Like humans, machine vision cameras struggle in poor driving conditions. Rain, fog, and night make visibility difficult. LiDAR supplies its own light, so it can map its surroundings in darkness as well as daylight, although heavy rain and fog can still degrade its returns. And since optical cameras can better distinguish between objects and can more easily read traffic signals and road signs, combining the two may be a win-win scenario.
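One way the two sensors could complement each other is sketched below: the camera identifies an object and LiDAR supplies its distance. The sketch assumes the LiDAR points have already been transformed into the camera's coordinate frame (x right, y down, z forward), that the camera follows a simple pinhole model, and that a detector has produced a bounding box in pixels. The function names and parameters are hypothetical, chosen for illustration only.

```python
from statistics import median


def project_to_pixel(point, fx, fy, cx, cy):
    """Project a 3D point in the camera frame onto the image (pinhole model).

    fx, fy are focal lengths in pixels; cx, cy is the principal point.
    Returns None for points behind the camera.
    """
    x, y, z = point
    if z <= 0:
        return None
    return (fx * x / z + cx, fy * y / z + cy)


def distance_to_detection(points, box, fx, fy, cx, cy):
    """Median forward distance of the LiDAR points that land inside a camera box.

    box is (left, top, right, bottom) in pixels. Returns None if no point
    falls inside the box.
    """
    left, top, right, bottom = box
    depths = []
    for p in points:
        pixel = project_to_pixel(p, fx, fy, cx, cy)
        if pixel is None:
            continue
        u, v = pixel
        if left <= u <= right and top <= v <= bottom:
            depths.append(p[2])
    return median(depths) if depths else None
```

The median is used here, rather than the mean, so that a few stray points from the background behind the object have less influence on the reported distance.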

Of course, machine vision cameras and sensors aren’t only used for autonomous vehicles. Purchase a camera or sensor from Phase 1 for your embedded vision project today!