Car manufacturers are trying to make automobiles safer than ever. Thanks to advanced driver assistance systems (ADAS), drivers and vehicles are able to anticipate and react to situations faster and smarter. Machine vision systems are central to the success of ADAS. Cars are learning to perceive the environment and respond autonomously or alert the driver.

How Machine Vision Works With ADAS

Machine vision gives automated systems the ability to “see” the environment they’re in. Capturing high-quality images is the first step. The machine vision system must then analyze what is going on. In real time, it must determine whether another vehicle is braking, a pedestrian is stepping into its path, or road conditions are changing. Then the system has to make a decision: does it need to brake, adjust speed, or change the vehicle’s direction?
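The decision step can be illustrated with a time-to-collision check, one common way to turn a detected object into an action. This is only a minimal sketch: the `Detection` type, the `decide` function, and the 1.5 s and 3.0 s thresholds are hypothetical values chosen for illustration, not figures from any production ADAS.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """One object reported by the vision system (hypothetical structure)."""
    label: str                # e.g. "vehicle" or "pedestrian"
    distance_m: float         # gap between our car and the object, in meters
    closing_speed_mps: float  # positive when the gap is shrinking


def time_to_collision(d: Detection) -> float:
    """Seconds until contact if nothing changes; infinite if the gap is not shrinking."""
    if d.closing_speed_mps <= 0:
        return float("inf")
    return d.distance_m / d.closing_speed_mps


def decide(detections, brake_ttc_s=1.5, warn_ttc_s=3.0):
    """Pick an action from the most urgent detection (thresholds are assumed values)."""
    ttc = min((time_to_collision(d) for d in detections), default=float("inf"))
    if ttc < brake_ttc_s:
        return "brake"
    if ttc < warn_ttc_s:
        return "warn_driver"
    return "continue"
```

For example, a vehicle 20 m ahead with the gap closing at 10 m/s gives a time to collision of 2 seconds, so this sketch would alert the driver rather than brake.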

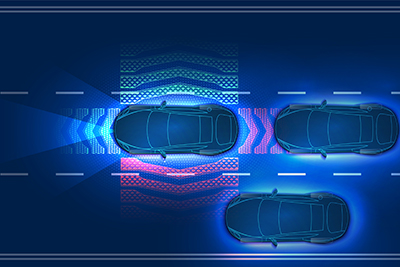

To make ADAS effective, automotive cameras must be set up to cover the front, rear, and surround views of a vehicle. Monocular and stereo cameras are used together to give the car a 3D view of its world. Because the vehicle must often react very quickly, CMOS sensors are preferred for their fast frame rates. ADAS algorithms then process the image frames quickly to identify and avoid danger.
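To show how a stereo camera pair can recover depth, here is a minimal sketch of the standard pinhole stereo relation: depth equals focal length times baseline divided by disparity. It assumes a calibrated, rectified camera pair. The function name and the numbers in the example are illustrative assumptions, not values from a specific camera.

```python
def depth_from_disparity(disparity_px: float,
                         focal_length_px: float,
                         baseline_m: float) -> float:
    """Estimate distance to a point seen by both cameras of a rectified stereo pair.

    disparity_px:    horizontal shift of the point between left and right images
    focal_length_px: camera focal length expressed in pixels
    baseline_m:      distance between the two camera centers, in meters
    """
    if disparity_px <= 0:
        # No measurable shift: the point is too far away to range, or the match failed.
        return float("inf")
    return focal_length_px * baseline_m / disparity_px
```

With an assumed focal length of 1000 pixels and a 0.3 m baseline, a disparity of 20 pixels corresponds to a depth of about 15 m. Because disparity shrinks as distance grows, small matching errors have a larger effect on far objects than on near ones.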

ADAS is most useful in the most challenging conditions, so manufacturers are developing systems that still function properly in poor weather, heavy traffic, or on dangerous roads. Machine vision cameras are robust and can stand up to conditions like thunderstorms, heavy snow, and bad lighting. Pairing RGB cameras with LiDAR helps the system form better predictions and react accordingly.

The Future of Machine Vision and ADAS

Although ADAS has advanced considerably, many would argue that the technology still has a long way to go. Even a task like measuring the distance between vehicles can pose a challenge for ADAS. Changing light conditions and obstructions make it difficult to find hazards.

Growing the dataset for embedded vision algorithms is critical to the advancement of ADAS. Detecting road signs, pedestrians, and other vehicles may be easy for a human. But object variations and images captured from different angles and distances make training AI algorithms a challenge. Driving a car involves a complex set of perceptions and adjustments. No manufacturer has yet released a fully autonomous vehicle that can make the right call every time.

In many ways, ADAS can be more advanced and more reliable than a driver. ADAS is never distracted by passengers, activities, or surroundings. ADAS cannot be impaired by drugs, alcohol, or exhaustion. ADAS doesn’t get road rage. And as manufacturers improve the AI, ADAS will increasingly compensate for the mistakes caused by human error, making autonomous vehicles safer than those controlled by humans.

Want to add machine vision to your automated process? Let the experts at Phase 1 help you pick the machine vision camera you need.